Why Python Dominates AI Despite Its Parallel Processing Problem

A 62-year-old programmer’s “aha!” moment about the hidden architecture of modern AI.

I was brushing up on my trigonometry the other day (yes, at 62, we never stop learning) when nostalgia hit me like a wave. There I was, muttering “Some People Have Curly Brown Hair Through Proper Brushing”, our version of the SOHCAHTOA mnemonic, which I learned decades ago during my FSc classes at the Junior Cadet College of the Pakistan Navy in Manora.

Those were different times. Young cadets in crisp uniforms, the Arabian Sea breeze carrying the scent of salt and ambition, and mathematics that seemed abstract then but would prove foundational for everything that followed. Who knew a trigonometry lesson from my naval cadet days would unlock a key insight about modern AI?

As I worked through cos(θ) = 0.6 to find that familiar 53.13° angle, the answer to a question that had been bugging me for months suddenly clicked into place:
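That 53.13° is the classic 3-4-5 right triangle: base (adjacent side) 3, hypotenuse 5, so cos(θ) = 3/5 = 0.6. A quick check with Python's standard `math` module; the triangle's side lengths are the only assumption:

```python
import math

base, hypotenuse = 3, 5                     # the classic 3-4-5 right triangle
cos_theta = base / hypotenuse               # "Curly Brown Hair": cos = base / hypotenuse = 0.6
theta = math.degrees(math.acos(cos_theta))  # invert the cosine, convert radians to degrees
print(f"{theta:.2f} degrees")               # 53.13 degrees
```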

Why does AI use Python when it’s so bad at parallel processing?

Think about it. Modern AI models have billions of parameters. Every prediction involves massive parallel computations across thousands of processing units. Yet nearly the entire field runs on Python, a language famously hobbled by its Global Interpreter Lock (GIL), which prevents multiple threads from executing Python bytecode on multiple CPU cores at the same time.
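A minimal sketch of the GIL's effect, using only the standard library. `busy_sum`, `run_sequential`, and `run_threaded` are hypothetical helpers written for this illustration. On a standard CPython build, expect the four-thread run to take roughly as long as the sequential one, because only one thread can execute Python bytecode at a time; exact timings vary by machine and Python version, and the experimental free-threaded builds introduced in Python 3.13 behave differently.

```python
import threading
import time


def busy_sum(n: int) -> int:
    """Pure-Python CPU-bound loop; it holds the GIL for as long as it runs."""
    total = 0
    while n > 0:
        total += n
        n -= 1
    return total


def run_sequential(n: int, workers: int) -> tuple[list[int], float]:
    """Run the loop `workers` times, one after another."""
    start = time.perf_counter()
    results = [busy_sum(n) for _ in range(workers)]
    return results, time.perf_counter() - start


def run_threaded(n: int, workers: int) -> tuple[list[int], float]:
    """Run the loop `workers` times, one OS thread each."""
    results = [0] * workers

    def task(i: int) -> None:
        results[i] = busy_sum(n)

    threads = [threading.Thread(target=task, args=(i,)) for i in range(workers)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, time.perf_counter() - start


if __name__ == "__main__":
    _, t_seq = run_sequential(1_000_000, 4)
    _, t_thr = run_threaded(1_000_000, 4)
    print(f"sequential: {t_seq:.2f}s   4 threads: {t_thr:.2f}s")
```

Both functions compute identical results; the point is that adding threads buys little or nothing for pure-Python CPU work.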

This hits close to home. I’d finally mastered Go, falling in love with its elegant simplicity and powerful concurrency model that makes parallel programming feel natural rather than terrifying. Go felt like the future of systems programming, and I was riding that wave.

Then AI exploded, and suddenly everyone was talking about Python again. The irony wasn’t lost on me—just when I’d found my groove with Go’s superpowers, the industry zigged back to Python for the most parallel-intensive workload imaginable. But learning never stops. And sometimes, the journey back teaches you more than the journey forward ever could.

The “What, Not How” Revelation

Then it hit me with the force of that trigonometry breakthrough. The solution to the AI paradox follows the “What, Not How” principle, an idea I first encountered in C.J. Date’s 2000 book, What Not How: The Business Rules Approach to Application Development, while on an IRS assignment with CSC.

The connection felt profound. Here I was, a former naval cadet in his 60s, connecting dots between trigonometry mnemonics from Manora, business rules from the early 2000s, and today’s AI revolution.

Date was talking about declarative programming—building applications by stating WHAT needs to be done, not HOW to do it. Twenty years later, the exact same architectural pattern dominates AI.

Python tells the system WHAT to do. C++, Rust, and CUDA tell it HOW to do it fast.

It’s declarative programming all over again, just with neural networks instead of business rules.
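The same split shows up inside Python itself. In this minimal sketch (the `models` list and the `rank_by_hand` helper are hypothetical), the first version states WHAT we want and leaves the HOW to the built-in `sorted()`, which CPython implements in C; the second spells out every step of an insertion sort by hand. Both produce the same ranking.

```python
# Hypothetical records: (model_name, score)
models = [("alpha", 0.71), ("beta", 0.93), ("gamma", 0.85)]

# WHAT: "give me the models ordered by score, best first."
# The sorting loop itself runs in CPython's C implementation of sorted().
ranked = sorted(models, key=lambda m: m[1], reverse=True)


# HOW: the same result, with every step written out (insertion sort, descending).
def rank_by_hand(items):
    result = list(items)
    for i in range(1, len(result)):
        current = result[i]
        j = i - 1
        # Shift lower-scoring items right until current's slot is found.
        while j >= 0 and result[j][1] < current[1]:
            result[j + 1] = result[j]
            j -= 1
        result[j + 1] = current
    return result


print(ranked)  # [('beta', 0.93), ('gamma', 0.85), ('alpha', 0.71)]
```

The declarative version is shorter, harder to get wrong, and leaves the choice of algorithm to the engine.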

The Beautiful Deception: The Wrapper and the Engine

Here’s what’s really happening when you write this innocent-looking Python code:

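```python
# Python says "WHAT" to do: it's clean and readable
import torch

# Hypothetical stand-ins so the snippet runs on its own
model = torch.nn.Linear(4, 3)   # a tiny layer: 4 inputs -> 3 outputs
input_data = torch.randn(2, 4)  # a batch of 2 examples

predictions = model(input_data)  # the matrix math runs in PyTorch's C++ backend
result = torch.nn.functional.softmax(predictions, dim=-1)  # each row now sums to 1
```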

Python isn’t doing the heavy lifting. It’s the conductor of an orchestra where the musicians are high-performance engines written in C++, CUDA, and increasingly, Rust. Under the hood, a completely different conversation is happening:

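```cuda
// C++/CUDA says "HOW" to do it: explicit, parallel, close to the hardware.
// A deliberately simple sketch: one GPU thread handles one row of a rows x cols matrix.
// Production kernels typically add warp-level reductions and tuned memory access.
__global__ void softmax_kernel(const float* input, float* output, int rows, int cols) {
    int row = blockIdx.x * blockDim.x + threadIdx.x;  // global index = the row this thread owns
    if (row >= rows) return;                          // surplus threads in the last block do nothing

    const float* in = input + row * cols;
    float* out = output + row * cols;

    float max_val = in[0];                            // subtract the row max for numerical stability
    for (int j = 1; j < cols; ++j) max_val = fmaxf(max_val, in[j]);

    float sum = 0.0f;
    for (int j = 0; j < cols; ++j) { out[j] = expf(in[j] - max_val); sum += out[j]; }
    for (int j = 0; j < cols; ++j) out[j] /= sum;
}

// Host side: launch thousands of threads at once, 256 per block.
// softmax_kernel<<<(rows + 255) / 256, 256>>>(d_input, d_output, rows, cols);
```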
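For reference, this is the math a softmax kernel computes for a single row, written as plain Python with the same subtract-the-maximum trick that keeps `exp()` from overflowing. The `softmax` function here is a hypothetical teaching version, not PyTorch's implementation:

```python
import math


def softmax(row: list[float]) -> list[float]:
    """Reference softmax for one row: exp(x_i - max) / sum_j exp(x_j - max)."""
    m = max(row)                           # subtracting the max leaves the result unchanged
    exps = [math.exp(x - m) for x in row]  # ...but keeps every exponent <= 0, so no overflow
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0])
print([round(p, 4) for p in probs])  # [0.09, 0.2447, 0.6652]
```

The GPU version does exactly this, only for thousands of rows at once.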

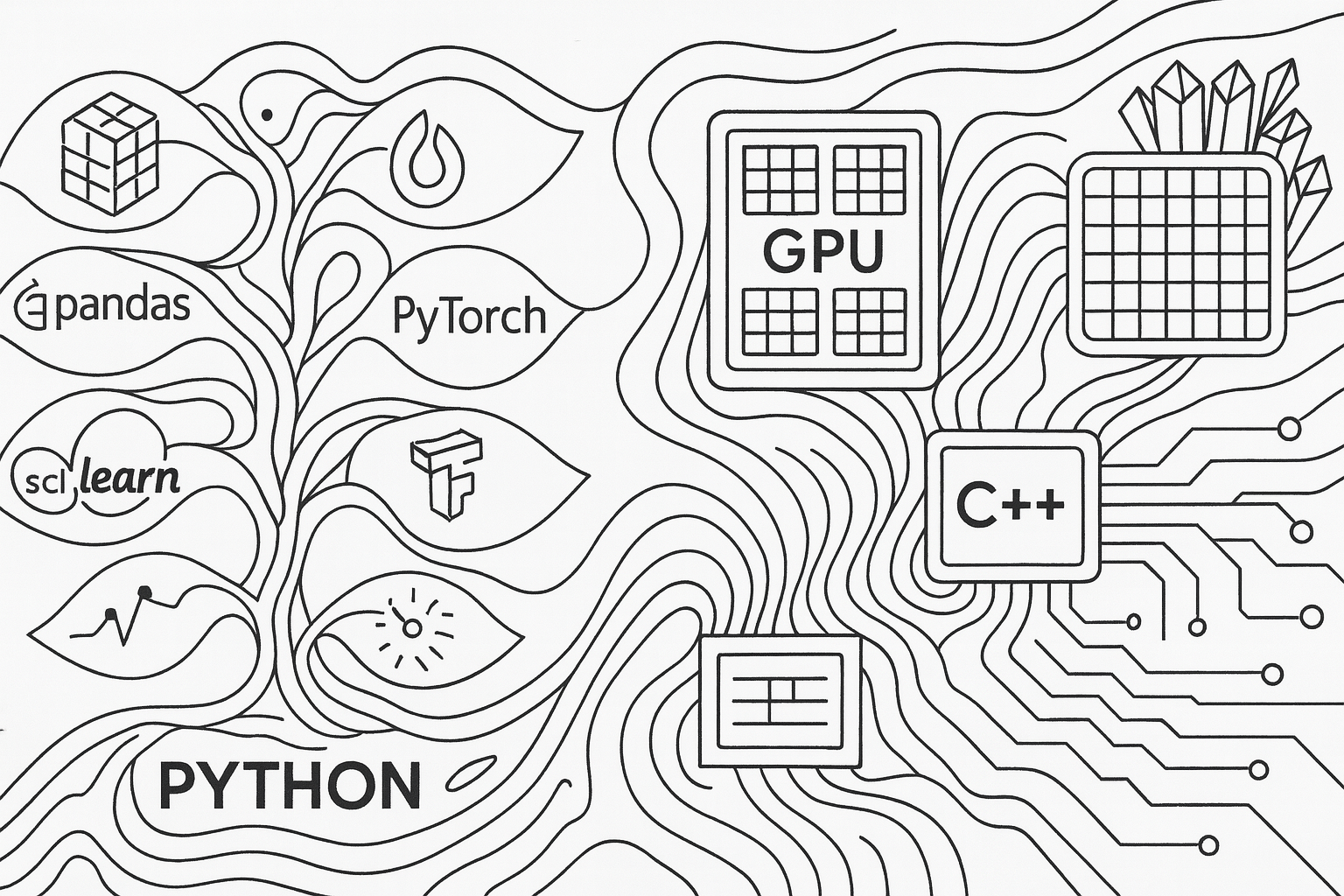

The AI stack is layered by design:

- Python (The Conductor): The high-level orchestration layer. It says, “Train this model using these layers and this data.”

- PyTorch / TensorFlow (The Engine): Frameworks whose C++/CUDA backends translate Python’s commands into brutally efficient, parallelized operations.

- GPU / CPU (The Hardware): Thousands of GPU cores (plus a handful of CPU cores) that execute the low-level instructions.
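You can see a miniature version of this layering without installing anything. In CPython, `inspect.isbuiltin()` returns True for functions implemented in C, such as `sum` and `math.fsum`, and False for a function written in Python, like the hypothetical `python_sum` below. Both answer the same WHAT; only the HOW differs.

```python
import inspect
import math


def python_sum(values):
    """Hypothetical pure-Python version: every iteration runs in the interpreter."""
    total = 0
    for v in values:
        total += v
    return total


numbers = list(range(1_000))
print(sum(numbers) == python_sum(numbers))  # True: same answer
print(inspect.isbuiltin(sum), inspect.isbuiltin(math.fsum), inspect.isbuiltin(python_sum))
# True True False
```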

Why Not Go for AI?

Don’t get me wrong—Go is fantastic. It excels at web services, distributed systems, and parallel CPU processing. But AI’s needs are different. It requires:

- Massive GPU Parallelism: The core of deep learning, which Go isn’t designed to manage directly.

- A Rich Research Ecosystem: A vast collection of battle-tested libraries like NumPy and SciPy that have been refined over decades.

- Rapid Prototyping: The simple, flexible syntax that allows researchers to experiment at high speed.

Go is excellent for building the infrastructure around AI systems, but Python owns the modeling pipeline itself.

This Pattern is Everywhere

Once you see this separation of “What” from “How,” you can’t unsee it. It’s a fundamental principle of modern computing:

- You write SQL → A database engine written in C or C++ (SQLite, PostgreSQL, MySQL) executes the query.

- You write JavaScript → The V8 engine (written in C++) runs it.

- You see a website → A C++ rendering engine like Blink or WebKit paints the pixels.

- You run Python ML code → A C++/Rust library crunches the numbers.
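Python's bundled `sqlite3` module makes the first item on that list easy to try. In this minimal sketch, the `layers` table and its numbers are hypothetical toy data. The query states only WHAT we want; SQLite, a C library that ships with Python, decides HOW to scan, group, and sort.

```python
import sqlite3

# Hypothetical toy table in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE layers (kind TEXT, params INTEGER)")
conn.executemany(
    "INSERT INTO layers VALUES (?, ?)",
    [("embedding", 1000), ("attention", 4000), ("mlp", 9000), ("attention", 4000)],
)

# WHAT: total parameters per layer kind, largest first.
# HOW (scan order, grouping strategy, sort) is the engine's decision, not ours.
rows = conn.execute(
    "SELECT kind, SUM(params) AS total FROM layers GROUP BY kind ORDER BY total DESC"
).fetchall()
print(rows)  # [('mlp', 9000), ('attention', 8000), ('embedding', 1000)]
conn.close()
```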

The newest member of the “How” club is Rust, with frameworks like Candle and Burn offering memory safety alongside extreme performance. But the pattern holds: a high-level language for intent, a systems language for execution.

The Wisdom of Separation

This architecture works because it plays to each language’s strengths. It creates a clean division of labor:

- Researchers & Data Scientists think in Python’s clean abstractions.

- Systems Engineers optimize performance primitives in C++, Rust, and CUDA.

- Everyone Wins, achieving both high productivity and extreme performance.

It’s like having a brilliant manager (Python) who can clearly communicate a vision to a team of hyper-efficient specialists (C++/Rust). The manager doesn’t need to know how to write SIMD instructions; they just need to know what to ask for.

The next time someone asks why AI doesn’t use a “faster” language, you can tell them: it does. Python is just the visible tip of a very powerful, compiled iceberg.

C.J. Date was right. The future is about declaring what we want, not programming how to achieve it. From the shores of Manora to the frontiers of artificial intelligence, this principle endures.

What do you think? Have you noticed this “What, Not How” pattern in other domains? If you enjoyed this perspective, you can follow my work and get in touch at khalidrizvi.com. I’m always happy to connect with fellow travelers on this incredible learning journey.