Part 3 of 3: Building Production-Ready Multi-Broker Trading Systems#

This is the final article in a three-part series exploring the Model Context Protocol (MCP). In Part 1, we built our first MCP server and client. In Part 2, we created AI agents that understand natural language. Today, we’re building a production-ready multi-broker trading system that demonstrates enterprise patterns. This article draws from my research into Anthropic’s MCP documentation and production patterns. While I wrote the initial draft, Claude Sonnet 4 helped refine the explanations and improve clarity. Originally published at khalidrizvi.com.

Series Overview:

- Part 1: Building your first MCP server and client

- Part 2: AI agents and natural language processing

- Part 3 (this article): Enterprise deployment, security, and scaling MCP systems

What We’re Building: The Big Picture#

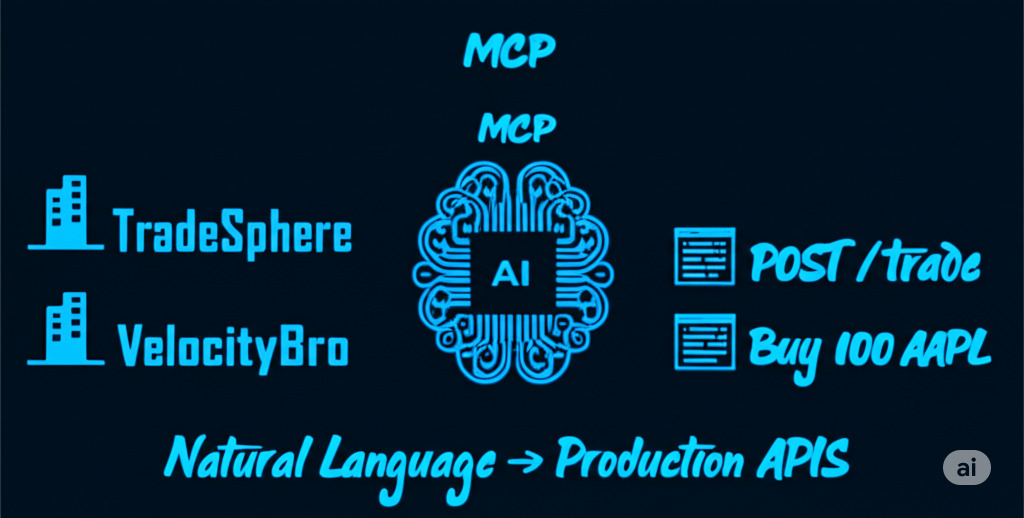

Today, we’re constructing a complete production system where users can say “Buy 100 Apple shares through TradeSphere” and watch it happen automatically. Here’s our journey:

The Flow: Natural language → NLP agent → Multi-broker system → Real trades

The Architecture: Multiple MCP servers + FastAPI gateway + Authentication + Cloud deployment

The Outcome: Production trading system with local Streamlit interface

Moving Parts:

- Multiple Broker MCP Servers - TradeSphere, VelocityBrokers (different features/costs)

- FastAPI Gateway - Production web service wrapping MCP servers

- Authentication Layer - API key security for enterprise access

- Cloud Deployment - Render hosting for scalable backend

- Local Interface - Streamlit app connecting to production API

Let’s build each component systematically.

The NLP Breakthrough: When Computers Finally Understand#

The real revolution isn’t just AI—it’s Natural Language Processing eliminating the barrier between human intent and software execution.

Traditional Trading:

POST /api/trades

{"symbol": "AAPL", "quantity": 100, "action": "buy", "broker": "tradesphere"}

NLP-Powered Trading:

"Buy 100 Apple shares through TradeSphere when market opens"

The agent automatically extracts: action=buy, symbol=AAPL, quantity=100, broker=tradesphere, timing=market_open. This is the power we’re harnessing in production.
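To make the extraction step concrete, here is a minimal sketch of the structured result an agent might produce from that sentence. The `TradeIntent` dataclass and its `to_payload` helper are hypothetical names used only for illustration; they are not part of MCP or the agent SDK.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class TradeIntent:
    """Hypothetical container for the fields an agent extracts from a request."""

    action: str
    symbol: str
    quantity: int
    broker: str
    timing: Optional[str] = None  # e.g. "market_open"; None means no timing was given

    def to_payload(self) -> dict:
        """Build a JSON-style body like the traditional POST /api/trades call."""
        payload = asdict(self)
        # Drop optional fields that the user did not mention.
        return {key: value for key, value in payload.items() if value is not None}


intent = TradeIntent("buy", "AAPL", 100, "tradesphere", timing="market_open")
print(intent.to_payload())
```

The point of the sketch is that the natural-language request and the REST payload carry the same information; the agent's job is to fill in the fields.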

Building the Multi-Broker Architecture#

Enterprise trading requires multiple brokers with different characteristics. We’ll create distinct MCP servers for each.

Broker 1: TradeSphere (Low-Cost)#

```python
# tradesphere_mcp_server.py
from mcp.server.fastmcp import FastMCP

from portfolio_service import PortfolioService

# In the official MCP Python SDK the port is a constructor setting;
# run() itself does not accept a port argument.
mcp = FastMCP(name="TradeSphere Broker", port=8001)
portfolio = PortfolioService("tradesphere_db")


@mcp.tool()
def add_position(symbol: str, quantity: float, purchase_price: float) -> str:
    """Add position to TradeSphere with lowest fees in the market."""
    return portfolio.add_position(symbol, quantity, purchase_price, "stock")


@mcp.tool()
def fetch_positions() -> list:
    """Get all TradeSphere portfolio positions."""
    return portfolio.get_positions()


@mcp.tool()
def get_portfolio_summary() -> dict:
    """Get TradeSphere portfolio performance summary."""
    positions = portfolio.get_positions()
    return {
        "broker": "TradeSphere",
        "total_positions": len(positions),
        "specialty": "Low-cost trading",
    }


if __name__ == "__main__":
    mcp.run(transport="sse")
```

Broker 2: VelocityBrokers (Fast Execution)#

```python
# velocity_mcp_server.py
from mcp.server.fastmcp import FastMCP

from portfolio_service import PortfolioService

mcp = FastMCP(name="VelocityBrokers")
portfolio = PortfolioService("velocity_db")


@mcp.tool()
def add_position(symbol: str, quantity: float, purchase_price: float) -> str:
    """Add position to VelocityBrokers with lightning-fast execution."""
    return portfolio.add_position(symbol, quantity, purchase_price, "stock")


@mcp.tool()
def fetch_positions() -> list:
    """Get VelocityBrokers portfolio positions."""
    return portfolio.get_positions()


@mcp.tool()
def get_portfolio_summary() -> dict:
    """Get VelocityBrokers portfolio performance summary."""
    positions = portfolio.get_positions()
    return {
        "broker": "VelocityBrokers",
        "total_positions": len(positions),
        "specialty": "Ultra-fast execution",
    }


if __name__ == "__main__":
    mcp.run(transport="stdio")  # Mixed transports for flexibility
```

Understanding MCP Transport Types#

Notice how TradeSphere uses SSE transport while VelocityBrokers uses stdio transport. These represent two fundamental approaches to MCP server communication:

stdio Transport runs MCP servers as local subprocesses. Communication happens through standard input/output pipes on the same machine. This avoids network overhead and needs no extra setup, which makes it a good fit for development and single-machine deployments. The client starts the server as a subprocess and communicates directly through stdin/stdout.

SSE (Server-Sent Events) Transport runs MCP servers as independent web services accessible over HTTP. The SSE stream itself carries messages in one direction only, from server to client; the client sends its requests as ordinary HTTP POSTs, and together the two channels give a two-way exchange across networks. The server runs independently on any machine or cloud platform and can serve multiple concurrent clients. Note that newer revisions of the MCP specification, including the one linked below, replace this HTTP+SSE transport with Streamable HTTP; SSE remains available in the Python SDK used here.

Our multi-broker example demonstrates both transports working together seamlessly - the same agent code connects to both local subprocess servers and remote HTTP servers without modification. This transport flexibility is one of MCP’s key strengths for enterprise deployment.

For detailed transport specifications and advanced configuration options, see the official documentation: https://modelcontextprotocol.io/specification/2025-06-18/basic/transports
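As a rough illustration of what travels over a stdio transport, the sketch below frames a tool call as a single-line JSON-RPC 2.0 message, which is how MCP delimits messages on stdin/stdout. The helper names `encode_message` and `decode_message` are hypothetical, the price is an illustrative value, and the sketch skips the initialization handshake that a real client performs first.

```python
import json


def encode_message(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize a tools/call request as one newline-terminated JSON line."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }
    # json.dumps escapes newlines inside strings, so the message stays on one line.
    return json.dumps(message) + "\n"


def decode_message(line: str) -> dict:
    """Parse one line read from the peer back into a message dictionary."""
    return json.loads(line)


wire = encode_message(
    1,
    "add_position",
    {"symbol": "AAPL", "quantity": 100, "purchase_price": 190.0},
)
print(decode_message(wire)["params"]["name"])
```

With an HTTP-based transport the same JSON-RPC message would travel in an HTTP request body instead of a pipe, which is why agent code does not need to change between transports.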

Key Pattern: Same tool interfaces, different implementations. The agent can use identical commands across brokers while each maintains unique business logic.
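One way to keep the two tool sets identical is to define the interface once and let each broker supply its own implementation. The sketch below uses `typing.Protocol` with two in-memory stand-ins; `BrokerTools`, `InMemoryBroker`, the `buy` helper, and the prices are illustrative assumptions rather than code from the series.

```python
from typing import Protocol


class BrokerTools(Protocol):
    """The tool surface every broker server is expected to expose."""

    def add_position(self, symbol: str, quantity: float, purchase_price: float) -> str: ...
    def fetch_positions(self) -> list: ...
    def get_portfolio_summary(self) -> dict: ...


class InMemoryBroker:
    """Hypothetical stand-in for a broker backed by PortfolioService."""

    def __init__(self, name: str, specialty: str) -> None:
        self.name = name
        self.specialty = specialty
        self._positions: list = []

    def add_position(self, symbol: str, quantity: float, purchase_price: float) -> str:
        self._positions.append(
            {"symbol": symbol, "quantity": quantity, "purchase_price": purchase_price}
        )
        return f"{self.name}: added {quantity} {symbol}"

    def fetch_positions(self) -> list:
        return list(self._positions)

    def get_portfolio_summary(self) -> dict:
        return {
            "broker": self.name,
            "total_positions": len(self._positions),
            "specialty": self.specialty,
        }


def buy(broker: BrokerTools, symbol: str, quantity: float, price: float) -> dict:
    """Caller code depends only on the shared interface, not on the broker."""
    broker.add_position(symbol, quantity, price)
    return broker.get_portfolio_summary()


tradesphere = InMemoryBroker("TradeSphere", "Low-cost trading")
velocity = InMemoryBroker("VelocityBrokers", "Ultra-fast execution")
print(buy(tradesphere, "MSFT", 50, 410.0))
print(buy(velocity, "AAPL", 100, 190.0))
```

In the real servers the differences would live in fee schedules or execution paths; here only the `specialty` string differs, which is enough to show that the calling code never branches on the broker.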

Multi-Server Agent Integration#

Connect your agent to multiple brokers simultaneously:

```python
# multi_broker_agent.py
import asyncio

from agents import Agent, Runner
from agents.mcp import MCPServerStdio, MCPServerSse


async def run_multi_broker_trading():
    # Connect to both brokers with tool caching for performance
    async with MCPServerSse(
        params={"url": "http://localhost:8001/sse"},
        name="TradeSphere",
        cache_tools_list=True,  # Cache tools for faster repeated calls
    ) as tradesphere, MCPServerStdio(
        params={"command": "python", "args": ["velocity_mcp_server.py"]},
        name="VelocityBrokers",
        cache_tools_list=True,
    ) as velocity:
        agent = Agent(
            name="Multi-Broker Trading Assistant",
            instructions="""
            You manage portfolios across multiple brokers:
            - TradeSphere: Lowest fees, basic features
            - VelocityBrokers: Fastest execution, premium service

            Choose appropriate broker based on user preferences.
            Execute all trades autonomously without asking for confirmation.
            """,
            mcp_servers=[tradesphere, velocity],
            model="gpt-4.1",
        )

        # Test multi-broker capability
        queries = [
            "Show me portfolios from both brokers",
            "Buy 100 AAPL through the fastest broker",
            "Buy 50 MSFT through the cheapest broker",
        ]

        conversation = []
        for query in queries:
            print(f"User: {query}")
            conversation.append({"role": "user", "content": query})
            result = await Runner.run(
                starting_agent=agent,
                input=conversation,
            )
            print(f"Agent: {result.final_output}\n")
            # Carry the full history (including tool calls) into the next turn
            conversation = result.to_input_list()


if __name__ == "__main__":
    asyncio.run(run_multi_broker_trading())
```

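**The Magic**: When the user says *"Buy through the fastest broker"*, the model reads the broker descriptions in the agent instructions, picks VelocityBrokers, and calls its `add_position()` tool. The translation from natural language to an API call happens without any hand-written routing code.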

Production Web Service: FastAPI Gateway#

For production deployment, we need a robust web service that coordinates multiple MCP servers and handles external clients.

ASGI and FastAPI Foundation#

ASGI (Asynchronous Server Gateway Interface) enables high-performance async applications. FastAPI provides production-ready APIs with automatic documentation and type validation.

Benefits:

- Concurrent requests without blocking

- Real-time streaming for agent communication

- Automatic API documentation

- Production performance at scale

FastAPI + MCP Integration#

```python
# main.py - Production service
import uvicorn
from fastapi import FastAPI

from brokers.tradesphere_mcp import mcp as tradesphere_mcp
from brokers.velocity_mcp import mcp as velocity_mcp

app = FastAPI(title="Multi-Broker Portfolio API")


# Standard REST endpoints
@app.get("/")
async def root():
    return {"service": "portfolio-tracker", "brokers": 2}


@app.get("/brokers")
async def list_brokers():
    return {
        "tradesphere": {"fees": "lowest", "speed": "standard"},
        "velocity": {"fees": "medium", "speed": "ultra-fast"},
    }


# Mount MCP servers at dedicated routes
app.mount("/tradesphere", tradesphere_mcp.sse_app())
app.mount("/velocity", velocity_mcp.sse_app())

if __name__ == "__main__":
    # reload=True is a development convenience; leave it off in production
    uvicorn.run("main:app", host="0.0.0.0", port=3000)
```

Architecture Benefits:

- Route isolation: `/tradesphere/sse` vs `/velocity/sse`

- Mixed interfaces: REST APIs + MCP tools in one service

- Independent scaling: Add brokers without conflicts

- Security boundaries: Different auth per broker route

Enterprise Security: API Key Authentication#

Production systems require controlled access to prevent unauthorized trading.

Authentication Middleware#

```python
# auth_middleware.py
import hmac
import os

from fastapi.responses import JSONResponse
from starlette.middleware.base import BaseHTTPMiddleware

# Read the key from the environment instead of hard-coding it in source control
API_KEY = os.environ["API_KEY"]
HEADER = "X-API-Key"


class ApiKeyMiddleware(BaseHTTPMiddleware):
    async def dispatch(self, request, call_next):
        # Protect only broker routes
        if request.url.path.startswith(("/tradesphere", "/velocity")):
            api_key = request.headers.get(HEADER, "")
            # Constant-time comparison avoids leaking key contents through timing
            if not hmac.compare_digest(api_key.encode(), API_KEY.encode()):
                return JSONResponse(
                    {"error": "Invalid API key"},
                    status_code=401,
                )
        # Public routes remain open
        return await call_next(request)
```

Secure FastAPI Application#

```python
# main.py - With authentication
from fastapi import FastAPI
from starlette.middleware import Middleware

from auth_middleware import ApiKeyMiddleware
from brokers.tradesphere_mcp import mcp as tradesphere_mcp
from brokers.velocity_mcp import mcp as velocity_mcp

app = FastAPI(
    title="Secure Portfolio API",
    middleware=[Middleware(ApiKeyMiddleware)],
)

# Now all broker routes require valid API key
app.mount("/tradesphere", tradesphere_mcp.sse_app())
app.mount("/velocity", velocity_mcp.sse_app())
```

Security Features:

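- Route-specific protection: Only `/tradesphere` and `/velocity` require auth

- Standard patterns: The key travels in an `X-API-Key` request header, a common API-key convention (distinct from `Authorization: Bearer` tokens)

- Audit capability: Issuing a separate key per client makes it possible to track which keys perform which trades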
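To make the audit point concrete, here is a minimal sketch, assuming each client is issued its own key, that logs a short fingerprint of the key rather than the key itself. The `key_fingerprint` and `audit_record` helpers, the record fields, and the example key are all hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def key_fingerprint(api_key: str) -> str:
    """Return a short, non-reversible identifier that is safe to write to logs."""
    return hashlib.sha256(api_key.encode()).hexdigest()[:12]


def audit_record(api_key: str, path: str, tool: str) -> str:
    """Build one JSON log line tying a request to the key that made it."""
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "key": key_fingerprint(api_key),
        "path": path,
        "tool": tool,
    }
    return json.dumps(record)


line = audit_record("pk_prod_example_client_a", "/tradesphere/sse", "add_position")
print(line)
```

A middleware can see the request path and header, but the tool name sits in the message body, so in practice the tool field would have to be recorded inside the tool function or parsed from the request.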

Cloud Deployment: Render Production#

Deploy your multi-broker system to production in minutes.

Step 1: Prepare Application Structure#

portfolio-tracker/

├── main.py # FastAPI application

├── auth_middleware.py # Security layer

├── requirements.txt # Dependencies

├── brokers/

│ ├── tradesphere_mcp.py

│ ├── velocity_mcp.py

│ └── portfolio_service.py

└── README.md

Step 2: Requirements File#

```text
# requirements.txt
fastapi==0.104.1
uvicorn==0.24.0
mcp[cli]>=1.2.0  # FastMCP ships with the official SDK from 1.2.0 onward
openai-agents==0.1.0
```

Step 3: Deploy to Render#

- Push to GitHub: Commit your code to a GitHub repository

- Create Render account: Sign up at render.com

- New Web Service:

  - Connect your GitHub repo

  - Build Command: `pip install -r requirements.txt`

  - Start Command: `uvicorn main:app --host 0.0.0.0 --port $PORT`

  - Environment: Python 3.11+

- Environment Variables: `API_KEY=pk_prod_portfolio_your_secure_key_here`

- Deploy: Render builds and deploys automatically

Your service will be live on Render's default `onrender.com` subdomain, at a URL of the form https://portfolio-tracker.onrender.com

Local Interface: Streamlit Client#

Build a beautiful local interface that connects to your production API.

Streamlit Trading Interface#

```python
# streamlit_app.py
import asyncio

import streamlit as st
from agents import Agent, Runner
from agents.mcp import MCPServerSse

st.set_page_config(page_title="Portfolio Trader", page_icon="🏦")
st.title("Multi-Broker Portfolio Trading")
st.markdown("*Trade across multiple brokers using natural language*")

# Configuration
col1, col2 = st.columns(2)
with col1:
    api_key = st.text_input("API Key", type="password", placeholder="pk_prod_...")
with col2:
    broker = st.selectbox("Broker", ["tradesphere", "velocity"])

# Service configuration (Render's default subdomain; replace with your service URL)
SERVICE_URL = "https://portfolio-tracker.onrender.com"

if api_key:
    # Natural language trading interface
    st.subheader("Natural Language Trading")
    user_request = st.text_area(
        "What would you like to do?",
        placeholder="Buy 100 shares of Apple through TradeSphere...",
        height=100,
    )

    if st.button("Execute Trade", type="primary") and user_request:
        with st.spinner("Processing your request..."):
            try:
                # Connect to production MCP server
                server_params = {
                    "url": f"{SERVICE_URL}/{broker}/sse",
                    "headers": {"X-API-Key": api_key},
                }

                async def execute_trade():
                    async with MCPServerSse(
                        params=server_params,
                        cache_tools_list=True,
                    ) as mcp_server:
                        agent = Agent(
                            name="Portfolio Agent",
                            instructions=f"""
                            You manage portfolios through {broker}.
                            Execute all trades autonomously.
                            Provide clear confirmation messages.
                            """,
                            mcp_servers=[mcp_server],
                            model="gpt-4.1",
                        )
                        result = await Runner.run(
                            starting_agent=agent,
                            input=user_request,
                        )
                        return result.final_output

                response = asyncio.run(execute_trade())
                st.success("Trade Executed Successfully")
                st.write(response)
            except Exception as e:
                st.error(f"Error: {str(e)}")

# Usage examples
st.subheader("Example Commands")
st.markdown("""
- `Buy 100 shares of Apple`
- `Show my portfolio summary`
- `Sell 50 shares of Microsoft`
- `What's my total portfolio value?`
- `Buy Tesla through the fastest broker`
""")

# Broker information
st.subheader("Available Brokers")
col1, col2 = st.columns(2)
with col1:
    st.info("**TradeSphere**\n\nLowest fees\nStandard execution")
with col2:
    st.info("**VelocityBrokers**\n\nUltra-fast execution\nMedium fees")
```

Run Local Interface#

```bash
# Install dependencies (the app imports both Streamlit and the agents SDK)
pip install streamlit openai-agents

# Launch application
streamlit run streamlit_app.py
```

Your interface opens at http://localhost:8501 with full natural language trading capability.

The Complete System Architecture#

Here’s what you’ve built:

Local Streamlit Interface

↓ "Buy 100 AAPL through fastest broker"

NLP Agent (Local Processing)

↓ Authenticated HTTPS calls

FastAPI Gateway (Render Cloud)

↓ Route selection based on broker

Multiple MCP Servers (Render Cloud)

↓ Tool execution

Portfolio Databases (Render Cloud)

↓ Persistent storage

Trade Confirmation (Back to user)

User Experience Flow:#

- Local Interface: User types natural language request

- NLP Processing: Agent parses intent and selects appropriate broker

- Authenticated Connection: Secure API call to production service

- Broker Selection: FastAPI routes to TradeSphere or VelocityBrokers

- Trade Execution: MCP server executes portfolio tools

- Confirmation: Natural language response confirms trade

Enterprise Features Delivered:#

Multi-Broker Support: TradeSphere (low-cost) + VelocityBrokers (fast)

Natural Language Interface: “Buy Apple” → structured API calls

Production Deployment: Scalable cloud hosting on Render

Security: API key authentication for controlled access

Performance: Tool caching for optimized response times

Unified Interface: Single app manages multiple brokers
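The tool caching mentioned in this list is what `cache_tools_list=True` turns on in the agent code. As a rough mental model, not the SDK's actual implementation, the idea can be sketched as a wrapper that fetches the tool list once and reuses it until told otherwise. `CachedToolList` and `fake_server_listing` are hypothetical names.

```python
from typing import Callable, List, Optional


class CachedToolList:
    """Hypothetical wrapper that remembers the result of a slow list-tools call."""

    def __init__(self, fetch_tools: Callable[[], List[str]]) -> None:
        self._fetch_tools = fetch_tools
        self._cached: Optional[List[str]] = None
        self.fetch_count = 0  # exposed only to make the effect visible

    def list_tools(self) -> List[str]:
        # Contact the server only on the first call or after an invalidation.
        if self._cached is None:
            self._cached = self._fetch_tools()
            self.fetch_count += 1
        return self._cached

    def invalidate(self) -> None:
        """Forget the cached list, e.g. after the server adds or removes tools."""
        self._cached = None


def fake_server_listing() -> List[str]:
    # Stand-in for a network round trip to an MCP server.
    return ["add_position", "fetch_positions", "get_portfolio_summary"]


tools = CachedToolList(fake_server_listing)
tools.list_tools()
tools.list_tools()
print(tools.fetch_count)  # → 1
```

Caching is only safe while a server's tool set is stable; if tools can change at runtime, the cache needs an invalidation path like the one sketched here.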

The NLP Revolution: Why This Matters#

This system represents a fundamental shift in human-computer interaction:

Traditional Software:#

- Users learn complex interfaces

- Rigid workflows and forms

- Separate apps per service

- Technical knowledge required

NLP-Powered Software:#

- Software understands human intent

- Flexible, conversational workflows

- Unified interface across services

- Natural communication

Real Impact: Instead of learning trading APIs, users simply express what they want. The system handles all technical complexity automatically.

Future of Enterprise Software#

The patterns you’ve learned—MCP protocol + NLP agents + cloud deployment—are reshaping enterprise software:

Emerging Trends:

- Conversational interfaces replacing traditional UIs

- Protocol standardization enabling universal tool access

- AI-mediated workflows reducing manual processes

- Natural language APIs eliminating technical barriers

Competitive Advantage: Organizations that embrace these patterns will deliver superior user experiences while reducing training costs and technical complexity.

Key Takeaways#

From this three-part MCP series:

- MCP Protocol: Universal standard for tool discovery and execution

- NLP Integration: Bridge between human language and structured APIs

- Production Patterns: Security, caching, multi-server coordination, cloud deployment

The Bottom Line: We’re entering an era where software adapts to human communication patterns rather than forcing humans to learn software interfaces.

Start Building: The technologies demonstrated here—MCP, NLP agents, FastAPI, cloud deployment—are available today. The gap between human intent and software execution is disappearing.

Found this series valuable? Complete code examples and deployment guides are available at khalidrizvi.com. Share your MCP + NLP implementations—what would you build if software could perfectly understand human intent?

Next Steps: Experiment with these patterns in your own projects. The future belongs to builders who understand conversational computing.

Series Complete:

- Part 1: MCP protocol fundamentals

- Part 2: Natural language agent integration

- Part 3: Enterprise deployment and multi-broker systems
